The site crawl rate

Yandex robots regularly crawl sites and download their pages to the search database for indexing.

The site crawl rate is the number of requests per second that the robot sends to your site.
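The sketch below shows the arithmetic behind this definition under one assumption: you already have one timestamp per robot request, for example extracted from your server access log. The function name `crawl_rate` is hypothetical. It returns the average rate over the observed window and the peak number of requests within a single second.

```python
from collections import Counter
from datetime import datetime


def crawl_rate(timestamps: list[datetime]) -> tuple[float, int]:
    """Return (average, peak) robot requests per second.

    `timestamps` holds one entry per robot request (hypothetical input,
    e.g. parsed from the server access log).
    """
    if not timestamps:
        return 0.0, 0
    # Bucket requests by whole second to find the busiest second.
    per_second = Counter(ts.replace(microsecond=0) for ts in timestamps)
    peak = max(per_second.values())
    # Average over the time between the first and last request;
    # use at least one second so a single burst does not divide by zero.
    window = (max(timestamps) - min(timestamps)).total_seconds()
    average = len(timestamps) / max(window, 1.0)
    return average, peak
```

For example, four requests spread over 10 seconds, two of them in the same second, give an average of 0.4 requests per second and a peak of 2.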

Default setting

Yandex algorithms calculate the optimal crawl rate for each site so that the robot can download as many pages as possible without overloading the server. This is why the Trust Yandex option is turned on by default on the page in Yandex.Webmaster.

Changing the crawl rate

You may need to reduce the crawl rate if you notice a large number of robot requests to the server hosting your site. Such requests can increase the server response time and, as a result, slow down page loading. You can check these indicators in the Yandex.Metrica report.

Before changing the crawl rate for your site, find out which pages the robot requests most often:

- Analyze the server logs. To get them, contact the person responsible for the site or your hosting provider.

- View the list of URLs on the page in Yandex.Webmaster (set the option to All pages). Check whether the list contains service pages or duplicate pages, for example, URLs that differ only in their GET parameters.
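If you have access to the server logs, a short script can help with both checks above. The sketch below assumes the logs use the common "combined" access log format and treats any user agent containing `Yandex` as a Yandex robot; note that the user-agent string can be spoofed, so this is only a rough filter. The regular expression and the `summarize` helper are hypothetical. The script counts the most requested URLs and groups URLs that share a path but differ in GET parameters, which are candidates for duplicates.

```python
import re
from collections import Counter, defaultdict
from urllib.parse import urlsplit

# Assumed "combined" log format:
# IP ident user [time] "METHOD TARGET PROTOCOL" status size "referer" "agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<target>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def yandex_targets(lines):
    """Yield request targets (path + query) from lines with a Yandex user agent."""
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Yandex" in match.group("agent"):
            yield match.group("target")


def summarize(lines, top=10):
    """Return the most requested URLs and paths requested with several query strings."""
    targets = list(yandex_targets(lines))
    most_requested = Counter(targets).most_common(top)
    # Group targets by path only, ignoring the query string.
    variants = defaultdict(set)
    for target in targets:
        variants[urlsplit(target).path].add(target)
    duplicates = {path: sorted(urls) for path, urls in variants.items() if len(urls) > 1}
    return most_requested, duplicates
```

A path such as `/catalog` that appears as both `/catalog?page=2` and `/catalog?sort=price` shows up in `duplicates`, which suggests the robot is fetching the same content under several URLs.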

If the robot accesses service pages or duplicate pages, prohibit their indexing in the robots.txt file using the Disallow directive. This reduces the number of unnecessary robot requests.

To check if the rules are correct, use the Robots.txt analysis tool.
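For a quick local sanity check before using the Robots.txt analysis tool, Python's standard `urllib.robotparser` module can evaluate simple Disallow rules. The rules and URLs below are hypothetical examples. This is only an approximation: the module does plain prefix matching and does not implement every rule the Yandex robot supports (for example, the `*` and `$` wildcards), so the Robots.txt analysis tool in Yandex.Webmaster remains the authoritative check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block a service section and internal search pages.
ROBOTS_TXT = """\
User-agent: Yandex
Disallow: /admin/
Disallow: /search
"""


def is_allowed(url, robots_txt=ROBOTS_TXT, agent="YandexBot"):
    """Return True if `agent` may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


for url in (
    "https://example.com/catalog/shoes",
    "https://example.com/admin/login",
    "https://example.com/search?text=shoes",
):
    print(url, is_allowed(url))
```

Because matching is by prefix, `Disallow: /search` also blocks a URL such as `/searchable-page`; keep this in mind when writing short rules.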

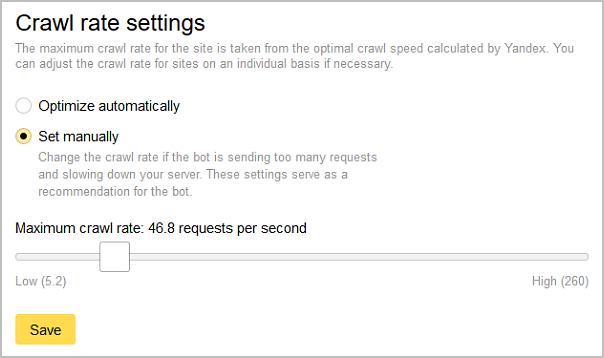

To change the crawl rate manually:

- In Yandex.Webmaster, go to the page.

- Turn on the Set manually option.

- Move the slider to the desired position. By default, it is set to the optimal crawl rate calculated for your site.

- Save your changes.